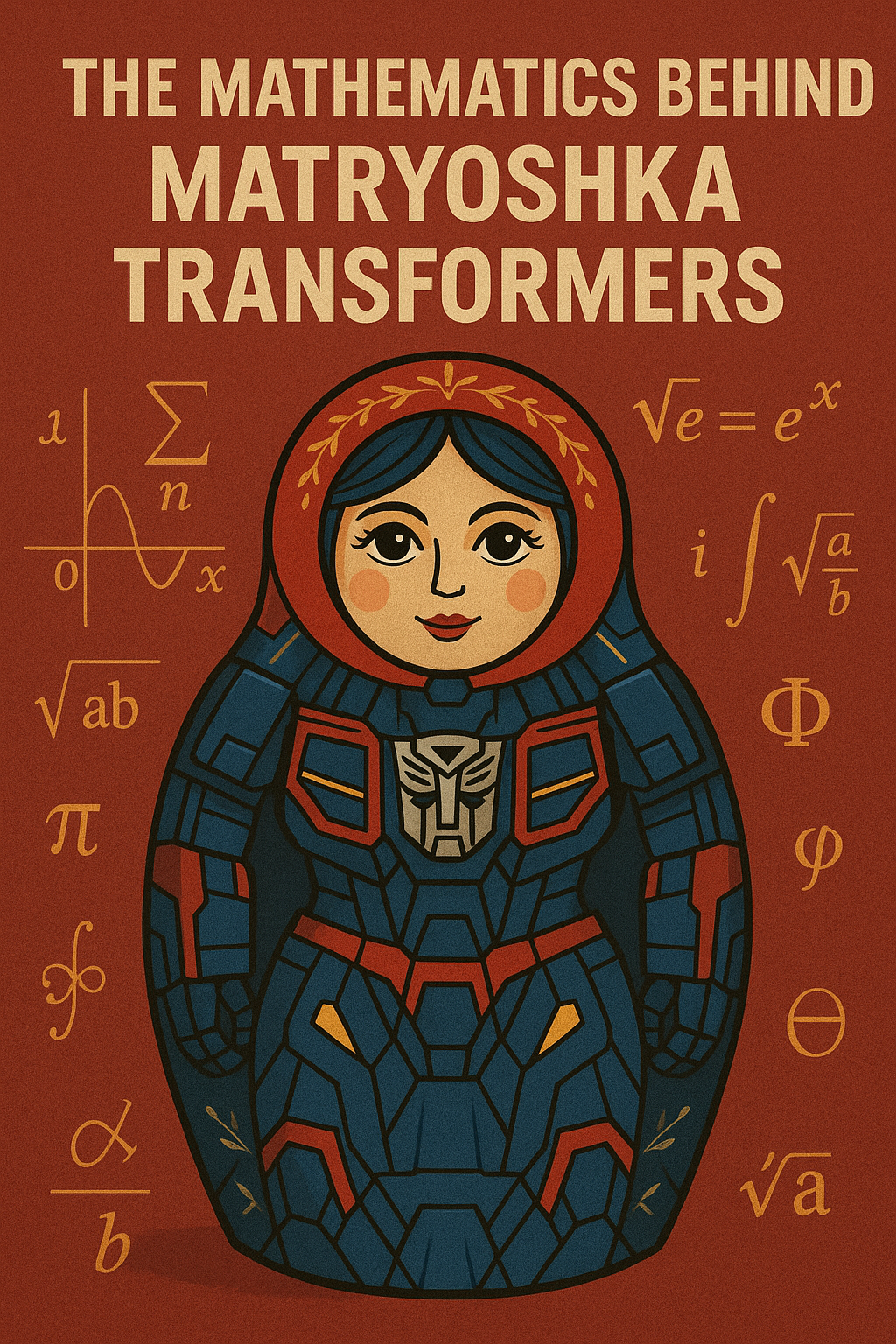

The Mathematics Behind Matryoshka Transformers

Introduction

Matryoshka Transformers represent a significant advancement in adaptive neural network architectures, inspired by the Russian nesting dolls (Matryoshka dolls) where smaller models are nested within larger ones. This architecture enables dynamic inference with variable computational costs while maintaining high performance across different resource constraints.

Core Mathematical Framework

1. Nested Representation Learning

The fundamental principle of Matryoshka Transformers lies in learning nested representations where smaller models are subsets of larger ones. Given a transformer with hidden dimension \(d\), we define a sequence of nested dimensions:

\[ d_1 < d_2 < d_3 < \ldots < d_k = d \]

For each layer \(l\) and nesting level \(i\), the hidden state \(h^{(l,i)}\) is defined as:

\[ h^{(l,i)} = h^{(l)}[:d_i] \]

where \(h^{(l)}[:d_i]\) represents the first \(d_i\) dimensions of the full hidden state \(h^{(l)}\) .

2. Multi-Scale Attention Mechanism

The attention mechanism is modified to operate across multiple scales simultaneously. For a given layer, the multi-scale attention is computed as:

\[ \text{MultiScaleAttention}(Q, K, V) = \text{Concat}(\text{head}_1, \text{head}_2, \ldots, \text{head}_k) \]

where each head \(\text{head}_i\) operates on the nested representation of dimension \(d_i\):

\[ \text{head}_i = \text{Attention}(Q[:d_i], K[:d_i], V[:d_i]) \]

The attention weights are computed using the scaled dot-product mechanism:

\[ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V \]

3. Nested Loss Function

The training objective incorporates losses at multiple scales to ensure that smaller nested models perform well independently. The total loss is:

\[ \mathcal{L}_{\text{total}} = \sum_{i=1}^k \alpha_i \cdot \mathcal{L}(f_i(x), y) \]

where:

- \(f_i(x)\) is the prediction using the first \(d_i\) dimensions

- \(\mathcal{L}(f_i(x), y)\) is the task-specific loss (e.g., cross-entropy)

- \(\alpha_i\) are weighting coefficients that balance the importance of different scales

4. Progressive Training Strategy

The training process follows a progressive strategy where smaller models are trained first, and larger models build upon them. The parameter update rule is:

\[ \theta_i^{(t+1)} = \theta_i^{(t)} - \eta \cdot \nabla_{\theta_i} \left[ \sum_{j=i}^k \alpha_j \cdot \mathcal{L}(f_j(x), y) \right] \]

This ensures that parameters contributing to smaller models receive gradients from all larger models that contain them.

Mathematical Properties

1. Representation Efficiency

The nested structure provides computational efficiency with a complexity reduction factor. For a model with \(n\) parameters and nesting levels with dimensions \([d_1, d_2, \ldots, d_k]\), the computational complexity for the smallest model is:

\[ O\left(n \cdot \frac{d_1}{d}\right) \quad \text{compared to} \quad O(n) \quad \text{for the full model} \]

2. Information Preservation

The mathematical guarantee of information preservation is achieved through the constraint that larger models must contain all information from smaller models. This is formalized as:

\[ I(Y; h^{(l,i)}) \leq I(Y; h^{(l,j)}) \quad \text{for } i < j \]

where \(I(\cdot\,;\,\cdot)\) denotes mutual information between the representation and target \(Y\).

3. Gradient Flow Analysis

The gradient flow through nested structures follows a hierarchical pattern. For parameter θᵢ contributing to representation dimension dᵢ, the gradient magnitude satisfies:

\[ \|\nabla_{\theta_i} \mathcal{L}_{\text{total}}\|_2 \geq \alpha_i \cdot \|\nabla_{\theta_i} \mathcal{L}(f_i(x), y)\|_2 \]

This ensures that smaller models receive sufficient gradient signal during training.

Layer-wise Mathematical Operations

1. Nested Feed-Forward Networks

The feed-forward network in each transformer layer is modified to support nested computation:

\[ \text{FFN}^{(i)}(x) = \max(0,\ x W_1^{(i)} + b_1^{(i)}) W_2^{(i)} + b_2^{(i)} \]

where \(W_1^{(i)} \in \mathbb{R}^{d_i \times d_{\text{mid}}}\) and \(W_2^{(i)} \in \mathbb{R}^{d_{\text{mid}} \times d_i}\) are the weight matrices for the \(i\)-th nesting level.

2. Layer Normalization Adaptation

Layer normalization is applied independently at each nesting level:

\[ \text{LayerNorm}^{(i)}(x) = \gamma_i \cdot \frac{x - \mu_i}{\sigma_i} + \beta_i \]

where \(\mu_i\) and \(\sigma_i\) are computed over the first \(d_i\) dimensions.

3. Positional Encoding

Positional encodings are extended to support nested dimensions:

\[ \text{PE}^{(i)}(\text{pos}, 2j) = \sin\left(\frac{\text{pos}}{10000^{\frac{2j}{d_i}}}\right) \] \[ \text{PE}^{(i)}(\text{pos}, 2j+1) = \cos\left(\frac{\text{pos}}{10000^{\frac{2j}{d_i}}}\right) \]

for \(j \in [0, \frac{d_i}{2})\)

Optimization Considerations

1. Learning Rate Scheduling

Different nesting levels may require different learning rates. The adaptive learning rate is:

\[ \eta_i = \eta_0 \cdot \sqrt{\frac{d}{d_i}} \cdot \lambda_i \]

where \(\lambda_i\) is a level-specific scaling factor.

2. Regularization

Regularization is applied to encourage similarity between nested representations:

\[ \mathcal{L}_{\text{reg}} = \sum_{i=1}^{k-1} \beta \cdot \| h^{(l,i+1)}[:d_i] - h^{(l,i)} \|_2^2 \]

This term encourages consistency across different scales.

Theoretical Analysis

1. Approximation Theory

The approximation error for a nested model of dimension dᵢ is bounded by:

\[ |f(x) - f_i(x)| \leq C \cdot \sqrt{\frac{d - d_i}{d}} \cdot \|x\|_2 \]

where \(C\) is a problem-dependent constant.

2. Generalization Bounds

The generalization bound for nested models follows:

\[ P\left(|R(f_i) - \hat{R}(f_i)| > \varepsilon\right) \leq 2 \exp\left(-\frac{2n \varepsilon^2}{d_i/d}\right) \]

where \(R(f_i)\) is the true risk and \(\hat{R}(f_i)\) is the empirical risk.

Implementation Considerations

1. Memory Efficiency

The memory footprint scales with the largest model while enabling inference at multiple scales:

\[ \text{Memory} = O(d \cdot L) \quad \text{where } L \text{ is the number of layers} \]

2. Computational Flexibility

The inference cost can be dynamically adjusted based on computational budget:

\[ \text{FLOPs}^{(i)} = O(d_i^2 \cdot L \cdot N) \]

where \(N\) is the sequence length.

Applications and Extensions

1. Adaptive Inference

The mathematical framework enables adaptive inference where the model can exit early based on confidence measures:

\[ \text{Exit\_Condition} = P(\hat{y}_i \mid x) > \tau_i \]

where \(\tau_i\) is a confidence threshold for level \(i\).

2. Distillation Integration

Knowledge distillation can be integrated into the nested framework:

\[ \mathcal{L}_{\text{distill}} = \sum_{i=1}^{k-1} \gamma \cdot \text{KL}\left(\text{softmax}\left(\frac{z_i}{T}\right),\ \text{softmax}\left(\frac{z_k}{T}\right)\right) \]

where \(z_i\) are the logits from the \(i\)-th level and \(T\) is the temperature parameter.

Conclusion

Matryoshka Transformers provide a mathematically rigorous framework for creating adaptive neural networks with nested computational capabilities. The mathematical foundations ensure efficient training, inference flexibility, and theoretical guarantees on performance across different scales. This architecture represents a significant step toward more efficient and adaptable transformer models for real-world applications with varying computational constraints.

Further Reading

- Progressive Neural Architecture Search

- Adaptive Neural Networks

- Multi-Scale Deep Learning

- Efficient Transformer Architectures